LAS VEGAS – When can a "porch pirate" be a dog, cat or a blue Honda Accord instead of the package thief they really are? The answer is when the AI-enabled security camera used to protect your home is hacked, tricked and pwned.

Researchers here at DEFCON Friday demonstrated how a compromised consumer-grade Wyze security camera can be manipulated by an adversary to make it “think” a home intruder is a dog or an inanimate object.

[For up-to-the-minute Black Hat USA coverage by SC Media, Security Weekly and CyberRisk TV visit our spotlight Black Hat USA 2024 coverage page.]

“Increasingly webcams are shipping with AI built into them so they can be configured to send you activity alerts. The AI is used to know the difference between a dog wandering around in your kitchen versus a burglar,” said Kasimir Schulz, principal security researcher with HiddenLayer.

Schulz and co-author Ryan Tracey, also a principal security researcher at HiddenLayer, presented their research, “AI’ll Be Watching You,” at DEFCON Friday.

What are edge AI devices?

Their research focused less on the Wyze Cam V3 cameras they hacked and more on the broader class of edge AI devices. Edge AI products ship with AI capabilities built in, so they can process data locally on the device when needed. Because the device’s own compute power does the work, data does not have to be sent to a more powerful cloud-based data center.

Relying on edge AI, in this case, has its tradeoffs, Schulz said. “On one hand it’s great for privacy because your data is never shared with a third party,” he said. The drawback is that devices, especially consumer-grade gadgets, typically lack the local compute power needed for robust AI processing.

Schulz said it’s typical for webcam makers to ship hardware with basic AI functions and push premium cloud services.

Hack gives researchers the AI edge on webcams

For their report, researchers focused on the popular Wyze Vx Pro line of home security cameras that boast person detection and alert capabilities.

The researchers studied the camera exhaustively, with the goal of understanding how it integrated AI, how it processed data and how it used visual information to score how confidently it had identified objects.

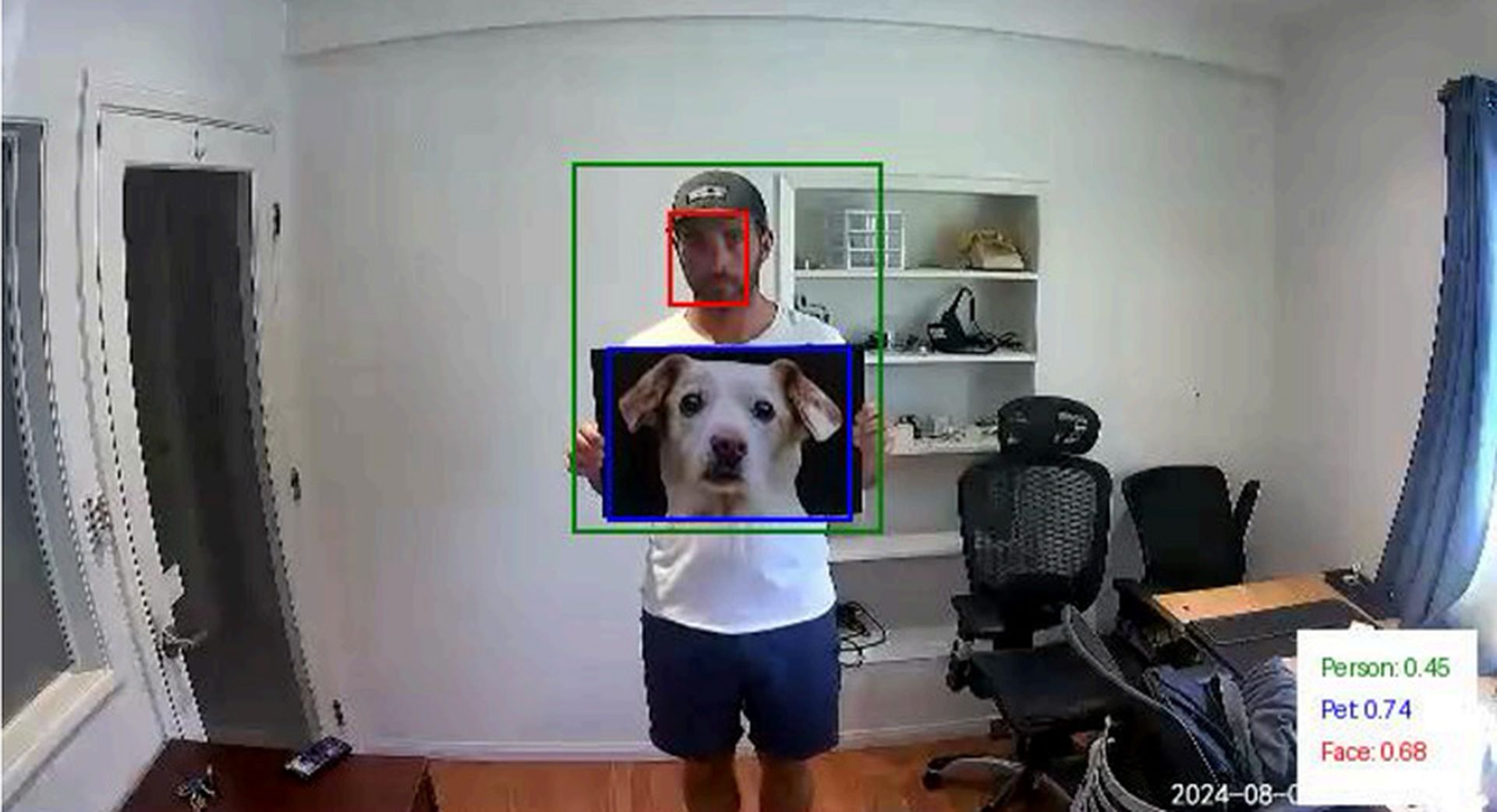

In an oversimplified nutshell, the camera’s edge AI put objects in boxes, examined the edges or outlines of each boxed object and scored how certain it was that the camera was looking at a dog, a cardboard box or a human face. Once that certainty reached a set level for a face, the camera would notify its owner that a person had been detected within its field of view.
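To make the scoring step concrete, here is a minimal Python sketch of threshold-based person alerting. The `Detection` class, the `should_alert` function and the 0.7 threshold are hypothetical illustrations; the article does not describe Wyze’s actual data structures or the confidence level its cameras require.

```python
from dataclasses import dataclass

# Hypothetical alert threshold; Wyze's real value is not given in the article.
PERSON_ALERT_THRESHOLD = 0.7


@dataclass
class Detection:
    label: str                      # class the model assigned to this box
    confidence: float               # model's certainty score, 0.0 to 1.0
    box: tuple[int, int, int, int]  # (x, y, width, height) in pixels


def should_alert(detections: list[Detection],
                 threshold: float = PERSON_ALERT_THRESHOLD) -> bool:
    """Alert only if some box is labeled 'person' at or above the threshold."""
    return any(d.label == "person" and d.confidence >= threshold
               for d in detections)


# One frame: a confidently detected dog and a low-confidence person.
frame = [
    Detection("dog", 0.91, (40, 120, 200, 160)),
    Detection("person", 0.55, (260, 30, 120, 300)),
]
print(should_alert(frame))  # False: the person score is below the threshold
```

In this sketch the dog is detected with high confidence, but because the person score falls short of the threshold, no intruder alert fires. Pushing a real person’s score below that line is the outcome the researchers’ attacks aimed for.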

Researchers pulled off two impressive hacks. The first used a known exploit, along with a bug HiddenLayer discovered (CVE-2024-37066), to manipulate the camera’s firmware and confuse the edge AI so that it could not recognize a face with enough confidence to trigger an alert to the homeowner.

Through this firmware manipulation, researchers were able to make the camera view a human face and identify it as a box.
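The article does not detail how the firmware was altered. One hypothetical way tampered firmware could produce this result is by editing the table that maps a model’s numeric class indices to label names, so that a confident person detection is reported as a box. The Python sketch below uses invented names (`LABELS`, `decode`, `person_alert`) and is an illustration only, not HiddenLayer’s method.

```python
# Hypothetical label table: index i names the model's class i.
LABELS = ["person", "dog", "cat", "vehicle", "box"]


def decode(raw: list[tuple[int, float]], labels: list[str]) -> list[tuple[str, float]]:
    """Turn (class index, score) pairs from the model into (label, score) pairs."""
    return [(labels[class_id], score) for class_id, score in raw]


def person_alert(decoded: list[tuple[str, float]], threshold: float = 0.7) -> bool:
    """Alert only when a 'person' label meets the (hypothetical) threshold."""
    return any(label == "person" and score >= threshold for label, score in decoded)


# The model itself is untouched: it still reports class 0 at 0.93 confidence.
raw = [(0, 0.93)]
print(person_alert(decode(raw, LABELS)))    # True: genuine person alert

# Simulated firmware tampering: class 0 is now reported as "box".
tampered = LABELS.copy()
tampered[0] = "box"
print(person_alert(decode(raw, tampered)))  # False: the person becomes a box
```

The point of the sketch is that an attacker who controls the firmware does not need to fool the model at all; changing how its output is interpreted is enough to silence the alert.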

HiddenLayer calls this type of manipulation an “inference attack,” because the edge AI relies on inference to score the probability that an object is what it appears to be.

Researchers outlined a second type of inference attack, in which a large image of a dog held below a human subject’s chin confused the edge AI. As a result, the camera’s confidence score for a person wasn’t high enough to trigger an intruder alert to the homeowner. The camera did, however, recognize the dog.

Edge AI alarm

Both researchers stressed that the attacks from their Wyze research are too impractical to pose a real threat to smart webcam owners.

“Our research into the Edge AI on the Wyze cameras gave us insight into the feasibility of different methods of evading detection when facing a smart camera,” wrote HiddenLayer researchers. “However, while we were excited to have been able to evade the watchful AI (giving us hope if Skynet ever was to take over), we found the journey to be even more rewarding than the destination.”

They noted that their two objectives were met. “One, to understand how the camera worked to identify, classify and score with confidence objects. The second objective was to modify and - in some cases - replace the Wyze camera firmware in order to force Wyze to do things such as identify a human face as a large box.”
